More than thirty years ago, in “Megatrends,” John Naisbitt put into words a feeling many of us recognise today: “We are drowning in information but starved for knowledge.” The statement is even more relevant in today’s world, which is filled to the brim with data for research, analysis, and decision-making. Pulling and refining data from questionnaires is a key part of that work, because questionnaires hold so many insights and so much valuable feedback.

However, this task comes with its own set of problems. The wide range of data types (from fixed-choice answers to free-text replies), the sheer amount of information, and the need for precision all create big challenges. These issues can make the process less efficient and lead to mistakes that lower the value of the data collected.

But it’s not all doom and gloom. Making the process of data extraction and processing from questionnaires more efficient is definitely possible. We understand the roadblocks developers and researchers encounter in this field. That’s why, today, we shine a light on successful strategies for handling questionnaire data so you can adopt a more efficient, accurate, and streamlined approach.

Let’s go through these strategies together. Get ready to turn challenges into chances for creativity and better research quality.

Pulling data from questionnaires is an important step in transforming the gathered responses into actionable insights. This involves handling both structured and unstructured data.

Structured data is the kind that fits neatly into categories, such as the choices selected in a multiple-choice question. On the flip side, unstructured data includes the free-text responses where participants express their thoughts in their own words.

Data can be collected in several ways, each reflecting how the questionnaire is shared and completed. For instance, paper forms are a classic approach that requires participants to mark their answers physically. These responses then need to be manually keyed into a digital system for analysis – a process that might take a bit of elbow grease but is key for some research types.

PDFs are another popular method, particularly for surveys that are shared electronically and that respondents print out, fill in, and send back. As with paper forms, turning these PDFs into analysable data often involves scanning and the magic of Optical Character Recognition (OCR) technology (we’ll talk about this a bit more later on).

The adoption of online survey tools has transformed data collection within the last few decades. How so? By enabling the direct digital capture of responses (which is much faster and easier for everyone involved). With an estimated 15 million active survey users sending over 3 million online survey invitations daily through SurveyMonkey alone, there is a huge number of digital surveys being filled out every day.

Each method comes with its own downsides and perks, yet the end goal is always the same: to efficiently compile and prep the data for deeper processing and analysis. No matter which type of data you’re dealing with, understanding these fundamentals is your first move towards mastering data management.

Picking the right set of tools and approaches for pulling data out of questionnaires is vital for the reliability and smooth running of your study. Among the many options out there, Optical Character Recognition (OCR) and Application Programming Interfaces (APIs) stand out for their utility.

The OCR market was worth $12.56 billion in 2023 and is expected to grow rapidly, at a rate of 14.8% yearly until 2030. This shows how much people are using and investing in OCR technology. Okay, you might say. But what is OCR?

Optical Character Recognition turns physical papers or PDFs into a form that digital systems can understand. Imagine scanning a stack of filled-out surveys and having them quickly converted into editable text. This technology shines in digitising responses from paper-based or PDF questionnaires, moving them swiftly from the collection phase to the analysis phase. The success of OCR technology, though, relies on the clarity of the original documents and on its ability to read varied handwriting correctly.
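To show why OCR output usually deserves a quick check before analysis, here’s a minimal sketch that repairs characters often misread in numeric answer fields (for example, the letter O standing in for the digit 0). The function name `clean_numeric_field` and the substitution table are assumptions made for this example and are not part of any particular OCR product.

```python
from typing import Optional

# Characters that are often confused with digits in OCR output
# (an illustrative table, not an exhaustive one).
OCR_DIGIT_FIXES = str.maketrans(
    {"O": "0", "o": "0", "l": "1", "I": "1", "S": "5", "B": "8"}
)


def clean_numeric_field(raw: str) -> Optional[int]:
    """Return the integer in an OCR'd numeric field, or None if it is unreadable."""
    candidate = raw.strip().translate(OCR_DIGIT_FIXES)
    # Accept the value only if every remaining character is a decimal digit.
    return int(candidate) if candidate.isdecimal() else None


if __name__ == "__main__":
    for raw in ["4O", " l2 ", "7", "n/a"]:
        print(repr(raw), "->", clean_numeric_field(raw))
```

Anything that comes back as `None` is a good candidate for a manual look at the original scan.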

Application Programming Interfaces (APIs) are the bridge for data between online survey platforms and your analysis tools. They’re like a direct line, automating the flow of responses straight into your database or software for crunching numbers. This automation cuts down on manual input and the errors that come with it. While APIs are great for managing structured data, they also bring unstructured feedback under control – by sorting it into a tidier format.
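Here’s a rough sketch of what that flow can look like in Python, using only the standard library. The endpoint URL, the bearer-token header, and the shape of the JSON payload (`respondent_id`, `answers`, `question_id`, `choice`, `text`) are all hypothetical; your survey platform’s API documentation will define the real ones.

```python
import json
import urllib.request
from typing import Any

# Hypothetical endpoint: replace with the URL documented by your survey platform.
API_URL = "https://survey.example.com/api/responses"


def fetch_responses(url: str = API_URL, token: str = "") -> list[dict[str, Any]]:
    """Download responses as JSON. URL, auth scheme and payload shape are assumptions."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(request, timeout=30) as reply:
        return json.load(reply)


def flatten_response(response: dict[str, Any]) -> dict[str, Any]:
    """Turn one nested response into a flat row, keeping free text in its own columns."""
    row: dict[str, Any] = {"respondent_id": response["respondent_id"]}
    for answer in response.get("answers", []):
        question = answer["question_id"]
        if "choice" in answer:
            # Structured data: a fixed-choice answer.
            row[question] = answer["choice"]
        else:
            # Unstructured data: a free-text answer, trimmed of stray whitespace.
            row[f"{question}_text"] = answer.get("text", "").strip()
    return row


if __name__ == "__main__":
    sample = {
        "respondent_id": "r1",
        "answers": [
            {"question_id": "q1", "choice": "Yes"},
            {"question_id": "q2", "text": "  Too long  "},
        ],
    }
    print(flatten_response(sample))
```

Keeping the download step and the flattening step separate makes the second one easy to test without touching the network.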

Choosing the best tool is more than a matter of preference – it’s about what fits the nature of your questionnaires, the kind of data you’re after (structured or unstructured), and the demands of your project.

After pulling data from questionnaires, we move on to the essential steps of processing and tidying it up. It’s a bit like getting your data to take a bath and dress up properly before it steps out into the world of analysis. Let’s walk through what this makeover involves:

Why bother with all this? Well, just like you wouldn’t want to base your decisions on hearsay, you wouldn’t want to rely on messy data. Clean and well-processed data are the foundation of trustworthy analysis and insights. Skipping out on these steps is like heading out with your shirt inside out – you might not notice the mistake right away, but it’s bound to lead to some awkward moments. Investing a bit of time here sets you up for a smoother ride down the analysis road, ensuring the conclusions you draw are solid and reliable.
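To make the tidying-up concrete, here’s a minimal sketch of a cleaning pass: it drops repeat submissions, blanks out ratings that are missing or off the scale, and collapses stray whitespace in comments. The field names (`respondent_id`, `rating`, `comment`) and the 1 to 5 scale are assumptions for the example, so adjust them to your own questionnaire.

```python
from typing import Optional


def clean_rows(rows: list[dict], scale_min: int = 1, scale_max: int = 5) -> list[dict]:
    """Drop duplicate respondents, tidy text, and blank out invalid ratings."""
    seen_ids = set()
    cleaned = []
    for row in rows:
        respondent = row["respondent_id"]
        if respondent in seen_ids:
            # Keep only the first submission per respondent.
            continue
        seen_ids.add(respondent)

        rating: Optional[int]
        try:
            rating = int(row.get("rating"))
        except (TypeError, ValueError):
            rating = None  # missing or non-numeric answer
        if rating is not None and not scale_min <= rating <= scale_max:
            rating = None  # outside the valid scale

        # Collapse runs of whitespace and treat a missing comment as empty.
        comment = " ".join(str(row.get("comment") or "").split())
        cleaned.append({"respondent_id": respondent, "rating": rating, "comment": comment})
    return cleaned


if __name__ == "__main__":
    raw = [
        {"respondent_id": "a", "rating": "4", "comment": "  Great   survey "},
        {"respondent_id": "a", "rating": "5", "comment": "duplicate"},
        {"respondent_id": "b", "rating": "9", "comment": None},
    ]
    for row in clean_rows(raw):
        print(row)
```

Invalid ratings are set to `None` rather than deleted, so you can still see how many answers were lost at this step.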

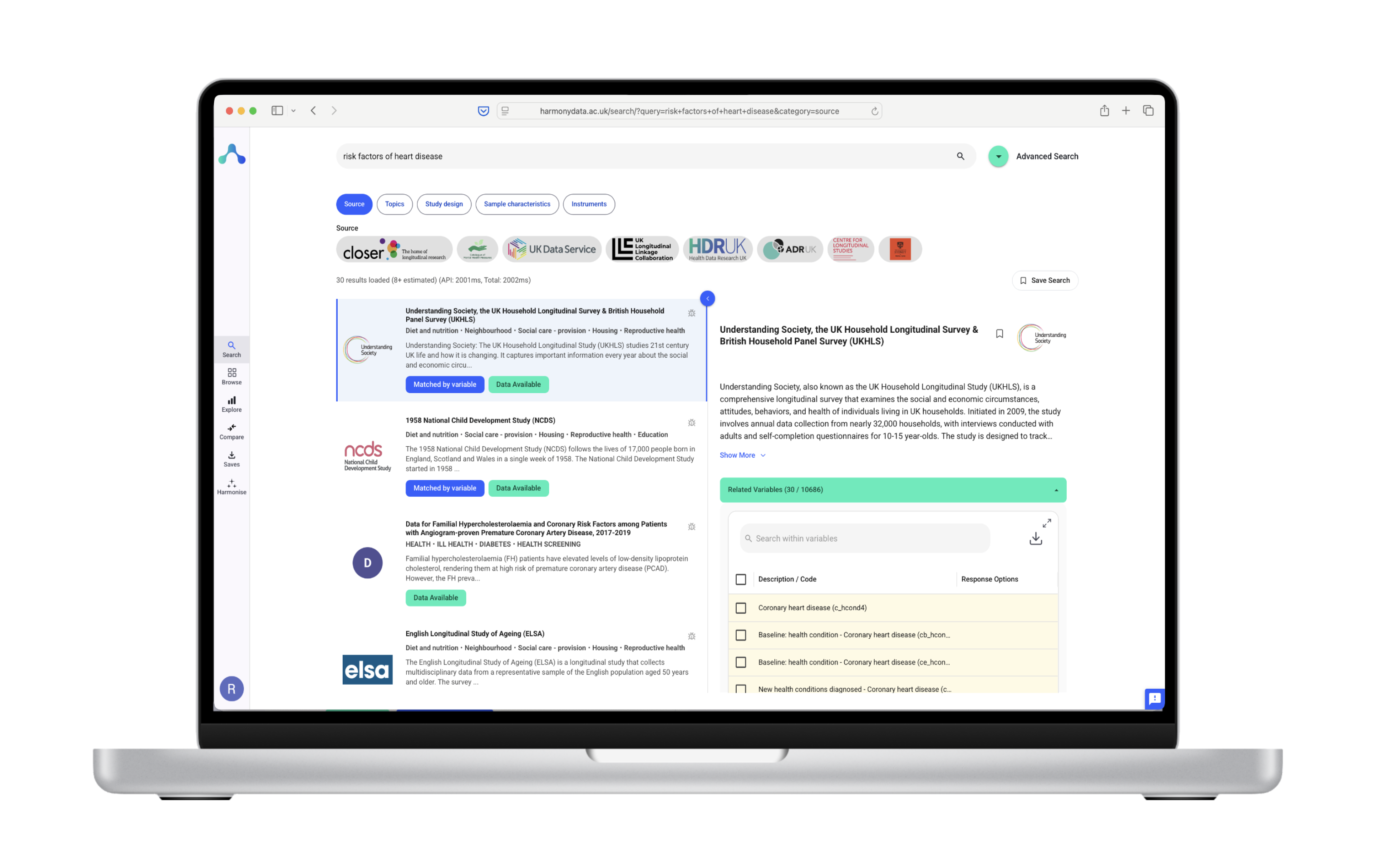

Seems like a lot of work? A customised method can greatly ease and improve the process. This is where we want to tell you about our favourite (and very own) tool: Harmony.

Harmony revolutionises the way we approach the complex task of harmonising questionnaire data. With its advanced Natural Language Processing (NLP) at the core, Harmony offers a tailored solution that excels in interpreting, comparing, and integrating data across languages and formats, making it invaluable for international research and projects with diverse data sources.

But the real charm of Harmony lies in its ability to:

Harmony isn’t just another tool; it’s a comprehensive ally for developers and researchers striving for meticulousness and efficiency in their endeavours. With features like:

It makes data extraction and processing so much easier!

Let us tell you about a real-life success story, because let’s be honest, don’t we all love those?

The Australian Data Archive (ADA), much like its counterpart in the UK, is filled with data spanning public opinions on housing, political viewpoints, and in-depth surveys on employment and health. But they needed our help to make sense of their data…

The challenge: Before Harmony came into the picture, the ADA faced a mountain of survey questions needing harmonisation. This task was filled with inconsistencies that risked the integrity of data across various studies.

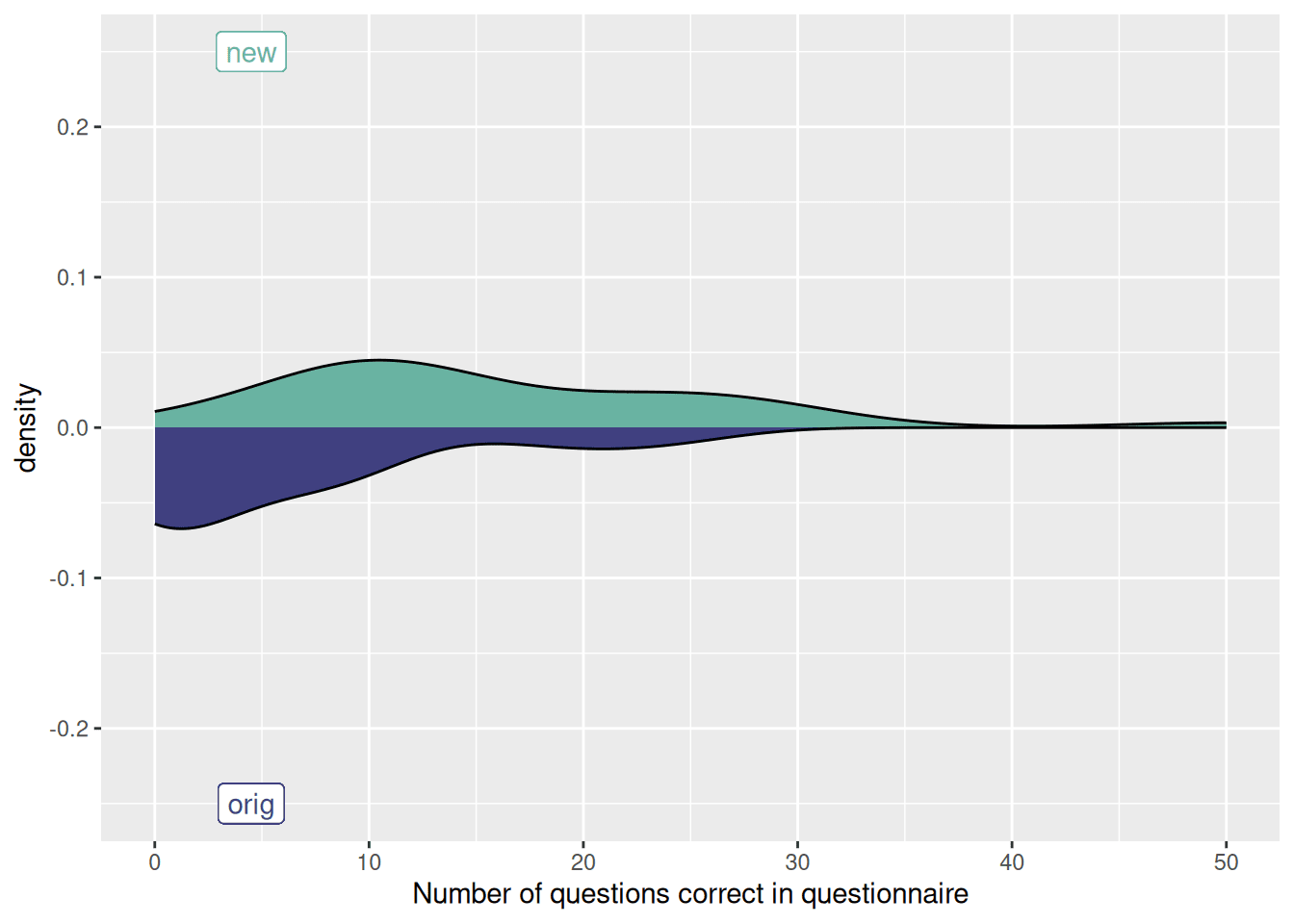

A new approach with Harmony: In search of a streamlined process, the ADA found its solution in Harmony. Harmony’s ability to group questionnaire items by their meaning offered a way through the ADA’s challenges.

The improvements that were made with Harmony’s help were impressive:

By bringing in Harmony, the ADA revolutionised its approach to managing survey data and enhanced both the process and the potential for discovery.

If you’re interested, you can read more about this case study here. And if you’ve become curious and would like to get inspired by the many other ways you can use our tool, check out our blog on 10 data harmonisation examples that move businesses and organisations forward. You will find many data harmonisation examples and uses that you might not have thought about.

Chip and Dan Heath once noted, “Data are just summaries of thousands of stories – tell a few of those stories to help make the data meaningful.” Our goal should be to turn raw data into stories that increase understanding and encourage action.

A thought-provoking piece of information from a NewVantage Partners survey points out that merely 24% of executives see their companies as driven by data. This shows us that there’s a big difference between gathering data and actually using it – a void that Harmony strives to fill by boosting data quality and making datasets work seamlessly together.

Our advice: Give Harmony a try. It helps make the harmonisation journey smoother and also amplifies the quality and practicality of your data. Start with a review of how you currently manage data and think about how incorporating sophisticated harmonisation tools might lessen the grunt work, enhance uniformity, and help you get richer insights from your datasets.

If you’re a developer who wants to get started with Harmony, take a look at our developer guide.

Let’s not stop at just amassing data. Instead, let’s dig into it for the compelling tales it holds, using forward-thinking tools to shape this information into wisdom that guides decisions, sparks change, and forges paths forward.

Got questions? We hear you! Here are our answers to some common concerns surrounding data harmonisation, data extraction and data processing:

Data standardisation is about converting data to a common format and making sure everything follows the same rules or standards, like changing dates to a single format (such as DD/MM/YYYY). Data harmonisation, on the other hand, takes this a step further by aligning different datasets so that they can work together seamlessly. It’s about making sure different pieces of data can “talk” to each other, even if they come from varied sources. We hope this clears up any confusion about data standardisation vs harmonisation.
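As a small example of standardisation, here’s a sketch that converts dates written in a few different styles to DD/MM/YYYY. The list of input formats is an assumption; extend it to cover whatever your own sources use.

```python
from datetime import datetime
from typing import Optional

# Input formats we expect to meet (an assumption; extend for your own sources).
KNOWN_FORMATS = ("%d/%m/%Y", "%Y-%m-%d", "%d-%m-%Y", "%d.%m.%Y")


def standardise_date(raw: str) -> Optional[str]:
    """Return the date as DD/MM/YYYY, or None if no known format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).strftime("%d/%m/%Y")
        except ValueError:
            continue  # try the next format
    return None


if __name__ == "__main__":
    for raw in ["2024-03-05", "05.03.2024", "5/3/2024", "next week"]:
        print(raw, "->", standardise_date(raw))
```

One caveat: a string like 03/04/2024 can’t tell you on its own whether the day or the month comes first, so you need to know each source’s convention before picking the formats.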

Data harmonisation is a process focused on making data from different sources compatible for analysis or use. Master data management (MDM), however, is a broader strategy that ensures the entire organisation uses consistent, accurate, and up-to-date master data. While data harmonisation is a task within the MDM framework, MDM encompasses a wider range of data governance, quality, and management practices.

Harmonising data involves several steps: identify the data to be harmonised, understand the format and structure of each source, and then apply transformations to align the data. Tools like mapping and matching are used to find common elements, and adjustments are made to resolve discrepancies in units, formats, or categorisations. The goal is to create a unified dataset that accurately represents information from all sources.
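To give a feel for the matching step, here’s a deliberately simple sketch that pairs up questions from two questionnaires by how many words they share (Jaccard similarity). This is a toy stand-in and not how Harmony works: word overlap misses questions that mean the same thing in different words, which is exactly where NLP-based tools earn their keep. The function names and the 0.5 threshold are assumptions for the example.

```python
import re
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lower-case a question and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of words two questions have in common (0 = none, 1 = identical sets)."""
    words_a, words_b = tokens(a), tokens(b)
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def match_items(source: list[str], target: list[str], threshold: float = 0.5) -> dict[str, str]:
    """Map each source question to its most similar target question above the threshold."""
    matches: dict[str, str] = {}
    for question in source:
        best: Optional[str] = max(
            target, key=lambda candidate: jaccard(question, candidate), default=None
        )
        if best is not None and jaccard(question, best) >= threshold:
            matches[question] = best
    return matches


if __name__ == "__main__":
    survey_a = ["How often do you feel nervous?", "Do you own a car?"]
    survey_b = ["How often do you feel nervous or anxious?", "What is your age?"]
    print(match_items(survey_a, survey_b))
```

Questions that fall below the threshold are left unmatched on purpose, so a person can review them rather than having a poor match slip through.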

While both processes aim to make data from different sources work together, data harmonisation is about making sure the data is compatible and consistent across datasets. Data integration focuses on combining data from multiple sources into a single, accessible repository. Harmonisation ensures quality and uniformity in the combined data, while integration is about the actual merging and storage of data.

Extracting and collecting data from a questionnaire involves designing the survey to capture the necessary information, distributing it to your target audience through methods like online platforms, paper forms, or interviews, and then gathering the responses. Digital tools can help automate the collection and initial sorting of data, especially for structured questions. For open-ended responses, qualitative analysis might be needed to understand the insights fully.

Processing a questionnaire starts with cleaning the data to remove any errors or irrelevant answers. Then, you categorise responses, especially for open-ended questions, to identify common themes or patterns. For quantitative data, statistical analysis can reveal trends and relationships. The entire process involves preparing the data for analysis, analysing it to draw conclusions, and then reporting the findings in an understandable way. This allows you to transform raw data into meaningful insights.
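Here’s a minimal sketch of the categorise-and-analyse part: it tags free-text comments with themes from a small keyword codebook and averages the numeric ratings. The codebook, the field names (`rating`, `comment`), and the keyword approach itself are assumptions for illustration; real qualitative coding is usually more nuanced.

```python
from collections import Counter
from statistics import mean

# Hypothetical codebook: theme name -> keywords that signal it.
THEMES = {
    "length": ("long", "short", "time"),
    "clarity": ("confusing", "unclear", "clear"),
}


def code_comment(comment: str) -> list[str]:
    """Return every theme whose keywords appear in a free-text comment."""
    words = [word.strip(".,!?") for word in comment.lower().split()]
    return [
        theme
        for theme, keywords in THEMES.items()
        if any(word in keywords for word in words)
    ]


def summarise(rows: list[dict]) -> dict:
    """Count themes across comments and average the ratings that are present."""
    theme_counts = Counter(
        theme for row in rows for theme in code_comment(row["comment"])
    )
    ratings = [row["rating"] for row in rows if row["rating"] is not None]
    return {
        "themes": dict(theme_counts),
        "mean_rating": mean(ratings) if ratings else None,
    }


if __name__ == "__main__":
    rows = [
        {"rating": 4, "comment": "Too long, but clear."},
        {"rating": 2, "comment": "Questions were confusing"},
        {"rating": None, "comment": "Fine"},
    ]
    print(summarise(rows))
```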
