Organisations typically collect data from multiple sources and for many different reasons. This data comes in various forms and formats – for example, it may be coming from market research, customer research or inter-organisation departments.

Data harmonisation, an advanced technique for making sense of all the raw data collected and used for research purposes, becomes necessary in this case. Unless it is incorporated effectively, however, organisations might miss the full, holistic view of business performance that they wish to gain.

If the data is not harmonised using the proper tools and frameworks, organisations are bound to miss some critical pieces of data, which will inevitably affect business performance at some point. Management might also miss potential opportunities, because before harmonisation the data is in disparate forms and widely scattered.

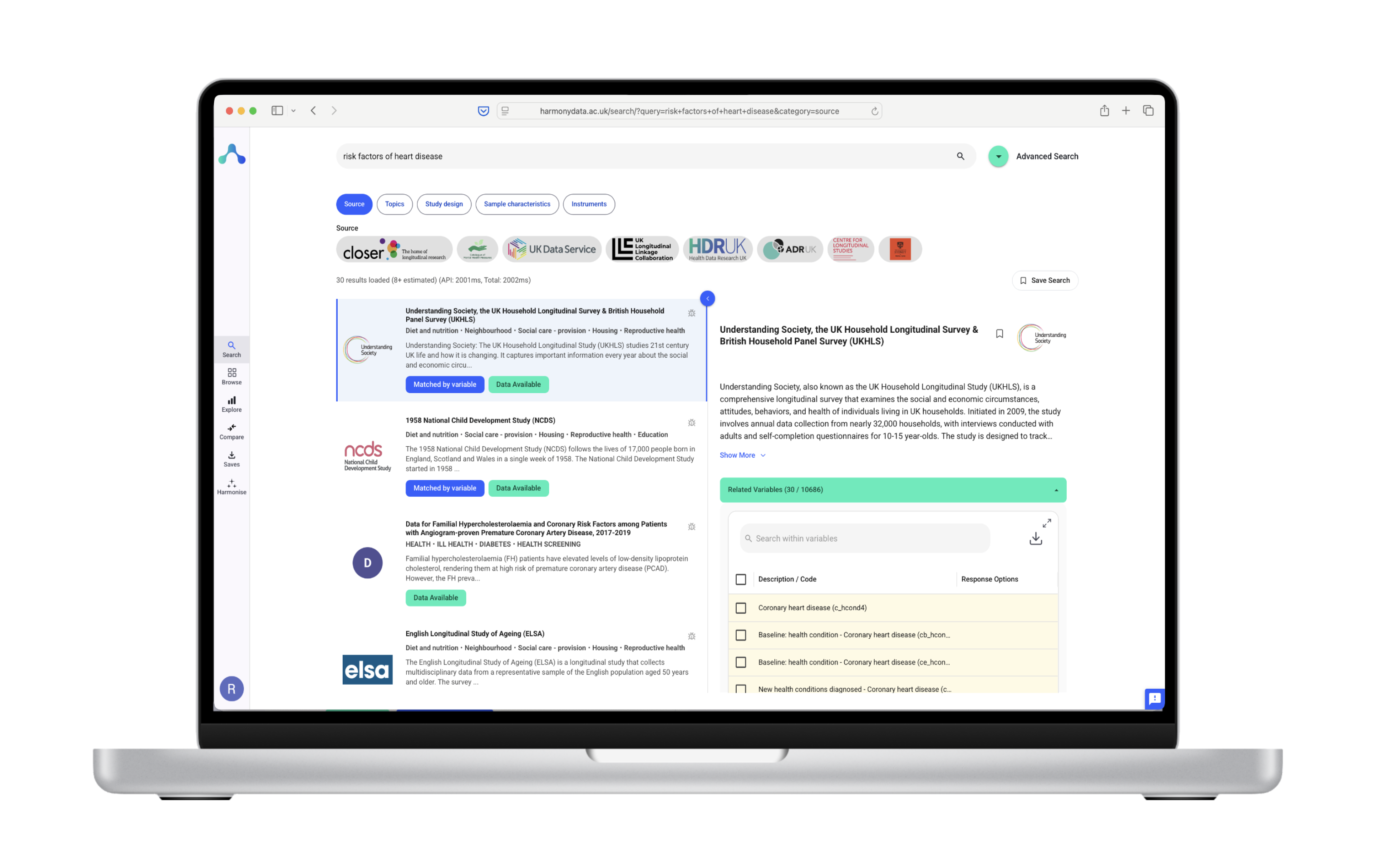

Harmony is a data harmonisation tool which has been developed for cases where datasets have variables with differing names, for example disparate questionnaires with questions in different formats.

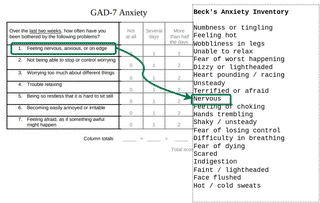

If you collected data using two questionnaires, such as the GAD-7 and the Beck Anxiety Inventory as in the image below, you would need to harmonise the datasets by identifying correspondences between variables (the arrow in the image).

Data harmonisation of GAD-7 and Beck Anxiety Inventory - this is what a data harmonisation tool such as Harmony would produce.
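To make the idea of “identifying correspondences between variables” more concrete, here is a minimal sketch in Python. It scores pairs of questionnaire items by simple word overlap (a Jaccard score). This is a deliberately crude stand-in chosen for illustration, not a description of how Harmony itself matches items. The item wordings and identifiers (`gad_1`, `bai_4` and so on) are abbreviated, illustrative assumptions rather than the official questionnaire text.

```python
import re

# Abbreviated, illustrative item wordings (assumptions, not the official text).
GAD7 = {
    "gad_1": "Feeling nervous, anxious or on edge",
    "gad_4": "Trouble relaxing",
    "gad_7": "Feeling afraid as if something awful might happen",
}
BAI = {
    "bai_4": "Unable to relax",
    "bai_5": "Fear of the worst happening",
    "bai_10": "Nervous",
}

STOPWORDS = {"to", "the", "of", "as", "if", "or", "on"}


def tokens(text):
    """Lower-case, drop stopwords, and crudely strip a trailing 'ing'."""
    words = re.findall(r"[a-z]+", text.lower())
    return {
        w[:-3] if w.endswith("ing") and len(w) > 5 else w
        for w in words
        if w not in STOPWORDS
    }


def jaccard(a, b):
    """Share of distinct tokens that two item texts have in common."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb)


def best_matches(source, target):
    """For each source item, pick the target item with the highest overlap."""
    result = {}
    for src_id, src_text in source.items():
        tgt_id = max(target, key=lambda t: jaccard(src_text, target[t]))
        result[src_id] = (tgt_id, round(jaccard(src_text, target[tgt_id]), 2))
    return result


matches = best_matches(GAD7, BAI)
for src_id, (tgt_id, score) in matches.items():
    print(f"{src_id} -> {tgt_id} (overlap {score})")
```

Word overlap only works here because the example items share vocabulary. Items that mean the same thing but use different words would need a semantic similarity measure instead.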

Here’s a video of how the data harmonisation tool Harmony works:

With the right data harmonisation frameworks and tools in your arsenal, you can support more informed organisational decision-making as well as far more efficient data processing. Business decisions become more accurate and reliable, and the quality of the business data on which those decisions are made is also enhanced. Because the processed and harmonised data is up to date, your organisation is in an agile state and ready to respond to market changes.

As mentioned at the beginning, organisations must take data from a variety of sources although these sources may not be the same every single time. They must then process it which in itself can be a cumbersome, time-consuming and often complex process where the chances of inaccuracies can be high.

However, with a robust data harmonisation process supported by the appropriate tools and frameworks, an organisation’s data can be quickly centralised and kept up to date. Less time is then required to index, verify, and track it, which enables swift decision-making in response to shifting consumer demand, for example, or evolving market conditions.

Effective data harmonisation is all about using the right mix of manual and automated techniques. At times, more manual techniques may be needed and vice-versa, depending on your business needs, although both need to be incorporated.

To make data harmonisation an automated process, for instance, AI and a technician’s skills must be used together. AI can be very useful here, as it can greatly reduce the probability of errors while processing data at a much faster rate.

Before we can start to use the various data harmonisation frameworks or tools, we need to understand this from the outset: the importance of establishing an institutional mechanism for managing data once it is harmonised. A common mechanism is needed, after all, which is easy to manage and update, and which can also be easily understood by everyone, from the data technicians themselves to management, directors, CEOs and stakeholders.

Additionally, the data model needs to be “smart” – that is, it is able to easily meet ongoing and future demands, so it doesn’t have to be changed every time, but rather, requires only a few changes or tweaks before being deployed. This will help your business perform at a consistent pace.

Finally, before kicking off data harmonisation, the key objectives must be established. This means clearly defining what the business objectives are and the underlying requirements for using data harmonisation. We will expand on this a bit more towards the end of the article.

So, in short, understand the purpose and specific outcomes you want with the harmonised data.

We can then discuss some of the specific data harmonisation tools and frameworks you should be using in 2024:

As you may already know, data harmonisation refers to a process where disparate data of varying formats, sources and types is integrated via certain tools and frameworks, in order for businesses to make key decisions.

Data harmonisation in the context of research, for example, refers to an organisation’s ability to connect with external data-based clinical and translational research projects, where patient data across multiple institutions and often different parts of the world (with different languages being spoken) is involved.

Before we get on with the best tools and frameworks to use for data harmonisation, we should quickly understand the distinction between data harmonisation and data standardisation. While both are used to achieve the same goal (data homogeneity), the former revolves around data consistency while the latter is about data conformity.

The Cambridge Biomedical Research Centre based in the UK has a very good definition in this regard:

“Standardisation refers to the implementation of uniform processes for prospective collection, storage and transformation of data. Harmonisation is a more flexible approach that is more realistic than standardisation in a collaborative context. Harmonisation refers to the practices which improve the comparability of variables from separate studies, permitting the pooling of data collected in different ways, and reducing study heterogeneity.”

A federated query lets one coordinating group publish an executable algorithm (case and control cohorts typically) using analytics, which can then be independently executed by international consortium members.

The federated query method ensures that no patient-level data is handled outside the organisation, preserving confidentiality and avoiding disclosure. That is what it does at the basic level. More complex federated query models can specify an entire matrix of covariates, tabulations or features (an intermediate step towards a parameter estimate and its variance), and the matrices from several sites can then be merged to arrive at more robust meta-analyses.

Since the federated query system only returns cell aggregate sub-totals in the matrix, patient-level data stays in the organisation and never gets exposed.
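The federated pattern described above can be sketched in a few lines of Python. In this minimal illustration, every site runs the same published function over its own patient-level rows and hands back only aggregate cell counts, which the coordinating group then pools. The function names (`run_cohort_query`, `pool`), the record fields, and the data are hypothetical and are not taken from any particular federated query system.

```python
from collections import Counter


def run_cohort_query(local_patients):
    """Runs inside a site: returns aggregate cell counts, never patient rows."""
    counts = Counter()
    for patient in local_patients:
        group = "case" if patient["has_condition"] else "control"
        counts[(group, patient["sex"])] += 1
    return dict(counts)


def pool(site_results):
    """Runs at the coordinating group: sums the per-site cell counts."""
    total = Counter()
    for result in site_results:
        total.update(result)  # Counter.update adds counts together
    return dict(total)


# Hypothetical patient-level data that never leaves each site.
site_a = [
    {"id": "a1", "sex": "F", "has_condition": True},
    {"id": "a2", "sex": "M", "has_condition": False},
    {"id": "a3", "sex": "F", "has_condition": False},
]
site_b = [
    {"id": "b1", "sex": "F", "has_condition": True},
    {"id": "b2", "sex": "M", "has_condition": True},
]

pooled = pool([run_cohort_query(site_a), run_cohort_query(site_b)])
print(pooled)
```

A real deployment would also need safeguards this sketch leaves out, such as suppressing very small cell counts that could identify individuals.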

When it comes to the widespread adoption of EHRs (electronic health records) and the need to reuse clinical data through clinical care, claims, and environmental integration, as well as other data, a very robust approach to data modelling is needed to satisfy the sheer complexity and demand for maximum usability involved in research.

We understand that data modelling involves determining which data elements are to be stored and how they are to be stored, including their respective relationships as well as constraints. The underlying structure as well as definitions of a data model indicate how the data can be stored, how the values will be interpreted, and how easily that data can be queried.

Significant efforts have been made to address some of the challenges involved in data standardisation and effective data modelling, both of which are required for data harmonisation. This has led to a number of widely accepted and prominent common data models (CDMs), such as OMOP, i2b2, and PCORnet, all of which are well described in Jeffrey G. Klann’s work on data model harmonisation for the “All of Us” Research Program.

We’ve handpicked a specific section from the publication to help you better understand the CDM landscape:

The PCORnet CDM is actively supported by all networks in the Patient-Centered Outcomes Research Institute (PCORI), so it has a wide base of support. In fact, more than 80 institutions have already transformed their data into this model. It was originally derived from the Mini-Sentinel data model, which in turn has seen steadily increasing uptake in claims data analysis.

PCORnet CDM has a traditional relational database design: each of the 15 tables corresponds to a clinical domain, such as labs, medications or diagnoses. The tables feature multiple columns, including the table key (e.g. encounter identifier and patient identifier) and additional details (e.g. medication frequency). As PCORnet CDM received more updates, new clinical elements could be added, for instance changes in data presentation (e.g. smoking status) and new domains (e.g. lab values).

Another notable CDM is i2b2, or ‘Informatics for Integrating Biology and the Bedside’. It was initially developed in 2004 through a grant from the NIH (National Institutes of Health) and remains very popular to this day. At present, i2b2 is used at well over 200 sites across the world, including multiple large-scale networks, such as the NCATS’ ACT (Accrual to Clinical Trials) network. It uses the star-schema format (pioneered by General Mills more than three decades ago), which is also a common choice for retail data warehouses.

The i2b2 star schema incorporates one large “fact” table which contains individual observations. It’s a narrow table containing several rows per patient encounter. Ontology tables, which are typically developed by local implementers, offer a window into the data.

The underlying data model is only modified when core features are added to the platform.
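The difference between a domain-style table (one wide row per encounter) and a narrow fact table (several rows per encounter, one per observation) can be shown with a short Python sketch. The column names here (`patient_id`, `encounter_id`, `concept`, `value`) are simplified, hypothetical stand-ins and do not reproduce the actual i2b2 schema.

```python
def to_fact_rows(wide_row, key_fields=("patient_id", "encounter_id")):
    """Turn one wide encounter row into narrow rows, one per recorded observation."""
    keys = {field: wide_row[field] for field in key_fields}
    return [
        {**keys, "concept": name, "value": value}
        for name, value in wide_row.items()
        if name not in key_fields and value is not None  # skip unrecorded values
    ]


# Hypothetical wide row: one column per kind of observation.
wide = {
    "patient_id": 1,
    "encounter_id": 10,
    "heart_rate": 72,
    "systolic_bp": 118,
    "smoking_status": None,
}

facts = to_fact_rows(wide)
for row in facts:
    print(row)
```

In the narrow layout, a new kind of observation becomes a new `concept` value rather than a new column, which is one reason the table structure can stay stable.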

OMOP (Observational Medical Outcomes Partnership) was developed as a shared analytics model from day one. It has been adopted by the OHDSI (Observational Health Data Sciences and Informatics, pronounced “Odyssey”) international consortium, a diverse collaborative effort dedicated to improving data research and quality.

This CDM is increasingly being utilised (at present, at over 90 sites around the world) owing to OHDSI’s fairly large community and plethora of analytical tools. OMOP utilises a hybrid model, providing domain tables in a similar vein to PCORnet as well as a “fact” table which contains individual atomic observations (along the same lines as i2b2).

However, the OMOP schema is somewhat more complicated than PCORnet’s, and some domain tables actually hold derived values for certain analytical purposes (e.g. drug_era or visit_cost). Unlike PCORnet, OMOP also offers metadata tables which contain information on terminology and concept relationships (similar to the ontology system in i2b2).

While each of the above CDMs offers a creatively executed solution to support research, each comes with limitations of its own. Therefore, organisations engaged in strategising their data harmonisation efforts and implementing data warehouses should choose a CDM based on their individual research needs.

With that said, more and more sites are realising that to participate in multi-site research, they may very well need the support of all of the above data models in order to achieve data interoperability with their chosen collaborator.

Pronounced “Fire”, FHIR (Fast Healthcare Interoperability Resources) is a vital standard pertaining to interoperability created by the HL7 (Health Level 7) International Healthcare Standards organisation.

The standard consists of data formats and elements, referred to as “resources”, along with an API for exchanging EHRs. Perhaps FHIR’s greatest benefit is that it is natively output by EHRs through APIs which are required for regulatory purposes.

The FHIR community is an open and collaborative one, tirelessly working with governments, academia, healthcare, payers, EHR vendors, and patient groups to define a health data exchange specification commonly shared across all groups involved. It builds upon the previous work and standard, while still retaining enough flexibility to make the specification usable and understandable.

The majority of practical demonstrations of FHIR have proven that it is highly effective at interoperability in open ‘connect-a-thons’ (events aimed at proving the efficacy of the standard). Any organisation engaged in data organisation and harmonisation needs to sit up and take notice because FHIR really matters. Here’s why:

FHIR is unique when it comes to health IT standards as it has enjoyed very positive responses universally; from health systems, governments, and academics to pharma, payers, and most EHR vendors, if not all. In fact, a number of communities have come up with FHIR Accelerators to enrich the standard’s rapid progress and maturation. Most interestingly, FHIR is widely adopted by the system developers for whom it was originally designed. This has resulted in FHIR’s proliferation throughout the clinical community at large.

The translational research community stands to benefit greatly from the generous investments made by clinical communities that are already using FHIR. Instead of reinventing specific data standards around research, it would make more sense to leverage the structure, tooling, infrastructure, detail, and specification coming from those clinical communities. Pending approval from the ONC (Office of the National Coordinator for Health IT), regulations will demand that all EHRs support FHIR APIs, which will drastically simplify data access and delivery in a way that conforms to FHIR’s parameters. Naturally, this will do away with the need for complex data extraction and translation when creating FHIR-based repositories.

The NIH is actively investing in FHIR, not only for the exchange of EHRs but also for research purposes, from academia to industry. NIH is also encouraging its researchers to explore the use of the FHIR standard to facilitate the capture, integration, and exchange of clinical data for research, and to enhance capabilities for sharing research data.

FHIR’s modular data models carry a flexible payload and reusable “containers” which can easily be assembled into working systems. This model allows for the exchange of well-defined and structured data in discrete units.
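As a rough illustration of what one of these discrete units looks like, the Python sketch below parses a small JSON document shaped like a FHIR Observation resource and flattens it into a single row for analysis. The field names (`resourceType`, `status`, `code.coding`, `subject.reference`, `valueQuantity`) follow the Observation resource as we understand it. The values are invented, and `flatten_observation` is a hypothetical helper, not part of any FHIR library.

```python
import json

# Invented example data, shaped like a FHIR Observation resource.
observation_json = """
{
  "resourceType": "Observation",
  "status": "final",
  "code": {
    "coding": [
      {"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}
    ]
  },
  "subject": {"reference": "Patient/example"},
  "valueQuantity": {"value": 72, "unit": "beats/minute"}
}
"""


def flatten_observation(resource):
    """Hypothetical helper: reduce one Observation to a flat analysis row."""
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected an Observation resource")
    coding = resource["code"]["coding"][0]  # use the first coding only
    quantity = resource.get("valueQuantity", {})
    return {
        "patient": resource["subject"]["reference"].split("/")[-1],
        "code_system": coding["system"],
        "code": coding["code"],
        "value": quantity.get("value"),
        "unit": quantity.get("unit"),
    }


row = flatten_observation(json.loads(observation_json))
print(row)
```

Real resources often carry several codings or non-numeric values, so a production mapping would need to handle more cases than this sketch does.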

As pointed out earlier, data harmonisation is a semi-automated process involving a set of activities which are customised according to a specific business model.

In order to effectively use the various data harmonisation frameworks and data harmonisation tools, it’s equally important to get a gist of how harmonisation of data works in itself:

First, the relevant data sources must be identified. Data can then be acquired from these sources, after which data sets are created.

The source of the data could be anything from market research to consumer research to business documents, for example. Or, all of the above.

A single schema must be created for the entire data to follow. The schema will contain all the required fields and validations.
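A schema with “required fields and validations” can be as simple as a dictionary of rules plus a function that checks records against it. The following is a minimal sketch in Python. The field names (`respondent_id`, `age`, `country`) and the rule keys are illustrative assumptions, and in practice a dedicated schema or validation library may be a better fit.

```python
# Hypothetical target schema: field name -> validation rules.
SCHEMA = {
    "respondent_id": {"type": str, "required": True},
    "age": {"type": int, "required": True, "min": 0, "max": 120},
    "country": {"type": str, "required": False},
}


def validate(record, schema=SCHEMA):
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    for field, rule in schema.items():
        value = record.get(field)
        if value is None:
            if rule["required"]:
                errors.append(f"{field}: missing")
            continue
        if not isinstance(value, rule["type"]):
            errors.append(f"{field}: expected {rule['type'].__name__}")
            continue
        if "min" in rule and value < rule["min"]:
            errors.append(f"{field}: below {rule['min']}")
        if "max" in rule and value > rule["max"]:
            errors.append(f"{field}: above {rule['max']}")
    return errors


print(validate({"respondent_id": "r1", "age": 34}))
print(validate({"age": 150}))
```

Returning a list of problems, rather than stopping at the first one, makes it easier to report every issue in a record at once.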

Data is always ingested by a system as raw data. The ingested data must, therefore, be evaluated in terms of integrity and validity. Inaccurate, incorrect, or inconsistent parts of the data are then identified as per the schema and modified, if need be.

Cleaning of data is also required to maintain quality, consistency, and uniformity.

At this point, the defined schema is applied to the raw data to obtain the harmonised data. Analyses must then be carried out to ensure that the harmonised data complies with the quality standards and has lost none of its accuracy or fidelity to the original.
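The cleaning and schema-application steps can be sketched together in Python. In this minimal example, each raw record is trimmed, coerced to the target types and renamed to the target field names, and records that cannot be converted are set aside rather than silently dropped. The raw field names (`id`, `age`, `country`) and the target fields are assumptions made for illustration.

```python
def clean(raw):
    """Trim whitespace and coerce types; return None if the record is unusable."""
    try:
        return {
            "respondent_id": str(raw["id"]).strip(),
            "age": int(str(raw["age"]).strip()),
            # Empty or absent country becomes None.
            "country": str(raw.get("country", "")).strip().upper() or None,
        }
    except (KeyError, ValueError):
        return None


# Hypothetical raw input with inconsistent formatting.
raw_records = [
    {"id": " r1 ", "age": "34", "country": "gb"},
    {"id": "r2", "age": "n/a"},
    {"id": "r3", "age": 51, "country": " fr "},
]

harmonised, rejected = [], []
for raw in raw_records:
    record = clean(raw)
    if record is None:
        rejected.append(raw)  # keep the original for manual review
    else:
        harmonised.append(record)

# Simple completeness check: every input record is accounted for.
assert len(harmonised) + len(rejected) == len(raw_records)
print(harmonised)
print(rejected)
```

Keeping the rejected records is one simple way to check that nothing was lost between the raw and the harmonised data.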

Harmonisation will always occur according to a business’s unique needs and the processes it follows.

Finally, the harmonised data is deployed on the system and made available to all parties or groups involved for further processing.

The up-to-date data can be accessed across all organisational levels, and modified as and when needed. Teams and departments do not need to develop their own datasets, which can be very expensive, error-prone, conflicting, and time-consuming.

Besides being familiar with some of the specific data harmonisation tools and frameworks, it is also important to know the different categories of tools, techniques, and frameworks available. For example:

Data integration tools are software-based tools which help in combining and transforming data from a variety of sources and formats, into a single unified data set.

These tools can help in performing many different tasks – for example, data transformation, data extraction, data mapping, data loading, and data synchronisation.

Data integration tools are designed to help handle different kinds of data like semi-structured, structured and unstructured data, as well as streaming data. Common examples include Apache NiFi, SQL Server Integration Services by Microsoft, and Pentaho Data Integration.
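At a very small scale, the core of what such tools do (combining records from different sources into one unified dataset) can be sketched in plain Python. The two sources, their field names (`customer_id`, `cust`, `email`, `satisfaction`) and the `integrate` function are hypothetical. The tools named above handle far larger volumes and many more formats.

```python
# Two hypothetical sources that identify customers under different field names.
crm_rows = [
    {"customer_id": "c1", "email": "ann@example.com"},
    {"customer_id": "c2", "email": "bob@example.com"},
]
survey_rows = [
    {"cust": "c1", "satisfaction": 4},
    {"cust": "c3", "satisfaction": 2},
]


def integrate(crm, survey):
    """Combine both sources into one record per customer (a full outer join)."""
    unified = {}
    for row in crm:
        unified[row["customer_id"]] = {
            "customer_id": row["customer_id"],
            "email": row["email"],
            "satisfaction": None,
        }
    for row in survey:
        entry = unified.setdefault(
            row["cust"],
            {"customer_id": row["cust"], "email": None, "satisfaction": None},
        )
        entry["satisfaction"] = row["satisfaction"]
    return sorted(unified.values(), key=lambda r: r["customer_id"])


dataset = integrate(crm_rows, survey_rows)
for record in dataset:
    print(record)
```

Customers that appear in only one source are kept, with the missing fields set to `None`, so that gaps stay visible instead of being hidden by the merge.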

Data quality tools are also software-based and are designed to help assess, monitor, and improve data in terms of its completeness, accuracy, consistency, validity, and timeliness.

They can help in identifying and resolving data errors like duplicates, outliers, missing values, and other inconsistencies. Data quality tools also help to enrich, standardise, and normalise data; for example, by adding metadata, converting units, and applying specific rules.

Some common examples are Talend Data Quality, InfoSphere QualityStage by IBM, and OpenRefine.
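Three of the checks mentioned above (duplicates, missing values and unit conversion) can be illustrated with a short Python sketch. The row layout, the `quality_pass` function and the choice of kilograms as the target unit are assumptions for this example and do not reflect how any of the named tools work internally.

```python
LB_TO_KG = 0.45359237  # kilograms per pound


def quality_pass(rows):
    """Drop duplicate ids, count missing weights, and convert pounds to kilograms."""
    seen = set()
    cleaned = []
    report = {"duplicates": 0, "missing_weight": 0}
    for row in rows:
        if row["id"] in seen:
            report["duplicates"] += 1
            continue
        seen.add(row["id"])
        weight = row.get("weight")
        if weight is None:
            report["missing_weight"] += 1
            weight_kg = None
        elif row.get("unit") == "lb":
            weight_kg = round(weight * LB_TO_KG, 1)
        else:
            weight_kg = float(weight)  # assumed to be kilograms already
        cleaned.append({"id": row["id"], "weight_kg": weight_kg})
    return cleaned, report


# Hypothetical input with a duplicate, a missing value and mixed units.
rows = [
    {"id": 1, "weight": 70, "unit": "kg"},
    {"id": 2, "weight": 154, "unit": "lb"},
    {"id": 2, "weight": 154, "unit": "lb"},
    {"id": 3, "weight": None, "unit": "kg"},
]

cleaned, report = quality_pass(rows)
print(cleaned)
print(report)
```

The report counts problems instead of fixing them silently, which mirrors the “assess and monitor” side of data quality work.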

These software-powered tools are designed to help in establishing and visualising the various connections and transformations between the data elements of different data sources as well as formats.

Data mapping tools also help in defining and managing the rules and logic around the transferring, conversion, and validation of data. In addition, they can help to automate and monitor the entire data mapping process, generating documentation and reports on the data mapping results.

Examples include Informatica PowerCenter, CloverDX Data Mapper, and Altova MapForce.
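The idea of defining mapping rules once and then both applying and documenting them can be sketched in Python as a small table of rules. The source and target field names, the transforms and the helper functions (`apply_mapping`, `document`) are hypothetical, and the products listed above offer far richer, usually graphical, ways of doing this.

```python
# Hypothetical mapping rules: (source field, target field, transform, description).
MAPPING = [
    ("DOB", "birth_date", str.strip, "trim whitespace"),
    ("Sex", "sex", lambda v: v.strip().upper()[:1], "first letter, upper-cased"),
    ("HeightCm", "height_m", lambda v: float(v) / 100, "centimetres to metres"),
]


def apply_mapping(source_row, mapping=MAPPING):
    """Build a target row by applying each rule's transform to its source field."""
    return {
        target: transform(source_row[source])
        for source, target, transform, _ in mapping
    }


def document(mapping=MAPPING):
    """Generate a plain-text description of the mapping from the same rules."""
    return [
        f"{source} -> {target}: {description}"
        for source, target, _, description in mapping
    ]


mapped = apply_mapping({"DOB": " 1990-05-01 ", "Sex": "female", "HeightCm": "172"})
print(mapped)
print("\n".join(document()))
```

Because the documentation is generated from the same rules that transform the data, the two cannot drift apart.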

With data visualisation tools, you can present and explore data in multiple graphical and interactive forms – e.g. maps, graphs, charts, dashboards, and stories.

Data visualisation tools are designed to aid you in communicating and sharing data insights with your audience, such as your coworkers, managers, and customers. They can also help you in discovering and analysing the trends, patterns, and outliers associated with your data.

Examples: Qlik Sense, Power BI, and Tableau.

Data modelling tools are used in the designing, creation, and managing of the data structure and relationships. They can help to define and document the various data attributes, types, elements, rules, and constraints.

Data modelling tools can also help in generating and executing the code and scripts needed to create as well as modify your data tables, keys, views, and indexes.

Typical examples of such tools include Oracle SQL Developer Data Modeler, PowerDesigner, and ER/Studio Data Architect.
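To show what “generating and executing the code and scripts needed to create your data tables” means in practice, here is a minimal Python sketch that turns a small model description into `CREATE TABLE` statements and runs them against an in-memory SQLite database. The model (a `patient` table and a `visit` table) and the `to_ddl` function are illustrative assumptions. Dedicated modelling tools support many more database features.

```python
import sqlite3

# Hypothetical data model: table name -> {column name: column declaration}.
MODEL = {
    "patient": {
        "patient_id": "INTEGER PRIMARY KEY",
        "birth_year": "INTEGER",
    },
    "visit": {
        "visit_id": "INTEGER PRIMARY KEY",
        "patient_id": "INTEGER NOT NULL REFERENCES patient(patient_id)",
        "visit_date": "TEXT NOT NULL",
    },
}


def to_ddl(model):
    """Generate one CREATE TABLE statement per table in the model."""
    statements = []
    for table, columns in model.items():
        column_sql = ", ".join(f"{name} {decl}" for name, decl in columns.items())
        statements.append(f"CREATE TABLE {table} ({column_sql});")
    return statements


conn = sqlite3.connect(":memory:")
for statement in to_ddl(MODEL):
    conn.execute(statement)

# List the tables that now exist in the database.
tables = [
    row[0]
    for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type='table' ORDER BY name"
    )
]
print(tables)
```

Keeping the model as data, and generating the SQL from it, means the same description could later be used to produce documentation or migration scripts.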

As we have learned, there are many options available when it comes to choosing specific data harmonisation frameworks and data harmonisation tools.

There’s no “good” or “bad” when it comes to using the best ones for harmonising data in 2024. We’re going to quickly reiterate that the choice, ultimately, comes down to your specific business goals and the outcomes you wish to achieve via data harmonisation.

In any case, you’re halfway there by choosing the right tools and frameworks!

The most commonly used instruments for assessing autism spectrum disorder (ASD) include:

ADOS (Autism Diagnostic Observation Schedule): This is a semi-structured observation tool that assesses communication, social interaction, and play behaviors in individuals with suspected ASD. It is considered the gold standard for diagnosing ASD in young children.

ADI-R (Autism Diagnostic Interview-Revised): This is a structured interview conducted with parents or caregivers to gather information about the individual’s developmental history, social communication, and repetitive behaviors. It is often used in conjunction with the ADOS for diagnosis.

AQ (Autism Quotient): This is a self-report questionnaire that assesses autistic traits in adults. It is not a diagnostic tool but can be used as a screening measure.

CHAT (Checklist for Autism in Toddlers): This is a screening tool for early identification of ASD in children aged 18-30 months.

BAPQ (Broad Autism Phenotype Questionnaire): This is a questionnaire that measures traits associated with the broad autism phenotype, such as aloofness, rigidity, and pragmatic language difficulties, in adults. It is not a diagnostic tool.

The choice of instrument depends on the age of the individual, the purpose of the assessment, and the specific needs of the clinician. Here are some comparisons which you can do with Harmony:

The most commonly used instruments for assessing alcohol consumption and abuse include:

AUDIT-10 (Alcohol Use Disorders Identification Test): A 10-item questionnaire that assesses alcohol consumption, dependence, and risk behaviors.

MAST (Michigan Alcoholism Screening Test): A 24-item questionnaire that assesses alcohol dependence and abuse.

ESPAD (European School Survey Project on Alcohol and Drugs): A survey that collects data on alcohol and drug use among adolescents.

ASSIST (Alcohol, Smoking and Substance Involvement Screening Test): An 8-item questionnaire that assesses alcohol, tobacco, and other substance use.

The choice of instrument depends on the purpose of the assessment, the age and demographic characteristics of the individual, and the specific needs of the researcher or clinician.